Linux Drivers: How the Kernel Mutex Is Implemented

July 2024 · Estimated reading time: 3 minutes

"A picture is worth a thousand words."

The mutex kernel structure

Once a mutex has been initialized, call mutex_lock or one of its variants to acquire the mutex, and call mutex_unlock to release it.

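The owner field serves two purposes:

debugging (CONFIG_DEBUG_MUTEXES) and the spin-on-owner optimization (CONFIG_MUTEX_SPIN_ON_OWNER). Spin on owner works like this: a process that holds a mutex will often release it very soon. If the current holder is running on another CPU and "I" am the only process waiting for the mutex, then "I" simply spin in place instead of going to sleep, because sleeping and being woken up again is too slow.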
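The spin-on-owner rule described above can be sketched as a small user-space model. This is only an illustration under stated assumptions, not kernel code: `model_task`, `model_mutex`, the `other_waiters` counter, and `model_should_spin` are hypothetical names, and the condition encodes just the two checks named in the text (the holder is running on another CPU, and nobody else is waiting).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for the kernel's task descriptor. */
struct model_task {
    int  cpu;     /* CPU the task is assigned to */
    bool on_cpu;  /* true while the task is actually executing */
};

/* Simplified model of the mutex fields discussed in the text. */
struct model_mutex {
    int                count;          /* 1: unlocked, 0: locked, <0: locked, possible waiters */
    int                other_waiters;  /* stands in for the entries queued on wait_list */
    struct model_task *owner;          /* current holder, NULL when nobody holds the mutex */
};

/*
 * Decide whether a waiter running on my_cpu should spin rather than sleep.
 * Assumption: spin only when the holder is executing on a different CPU
 * and no other process is waiting, as the text describes.
 */
bool model_should_spin(const struct model_mutex *m, int my_cpu)
{
    assert(m != NULL);
    const struct model_task *owner = m->owner;

    if (owner == NULL)
        return false;  /* no holder to spin on; the caller should just retry */

    return owner->on_cpu && owner->cpu != my_cpu && m->other_waiters == 0;
}
```

In this model a waiter on CPU 0 spins while the holder is executing on CPU 1 and the queue is empty; as soon as the holder is descheduled or a second waiter shows up, the function returns false and the waiter would go to sleep instead.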

Implementation of mutex_lock

1. fastpath

kernel/locking/mutex.c

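Here, if count is 1, the decrement brings it to 0: the mutex has been acquired, the if test is false, and the function returns immediately. If count is negative after the decrement, the slowpath is taken and the caller sleeps until the mutex becomes available.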
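The decrement-and-test logic just described can be tried out in user space with C11 atomics. This is a minimal sketch, not the kernel's listing: `model_mutex`, `model_fastpath_lock`, `model_lock_slowpath`, and the `slowpath_calls` counter are hypothetical names, and the slow path here only counts how often it is entered instead of sleeping.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

/* count: 1 = unlocked, 0 = locked, negative = locked with possible waiters */
struct model_mutex {
    atomic_int count;
};

/* How many times the slow path was entered, so the effect is observable. */
int slowpath_calls;

/* Placeholder slow path: a real one would queue the caller and sleep. */
void model_lock_slowpath(struct model_mutex *m)
{
    (void)m;
    slowpath_calls++;
}

/* Fast path: one atomic decrement; fall back only if the result is negative. */
void model_fastpath_lock(struct model_mutex *m,
                         void (*fail_fn)(struct model_mutex *))
{
    assert(fail_fn != NULL);

    /* atomic_fetch_sub returns the OLD value, so subtract 1 to get the new one. */
    int newval = atomic_fetch_sub_explicit(&m->count, 1, memory_order_acquire) - 1;

    if (newval < 0)
        fail_fn(m);  /* contended: someone else already holds the mutex */
}
```

Starting from count = 1, the first call leaves count at 0 and never touches the slow path; a second call on the same mutex drives count to -1 and enters the slow path once.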

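2. slowpath

If the mutex's current value is 0 or negative, __mutex_lock_slowpath has to be called to handle the request the slow way: the caller may sleep while it waits.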

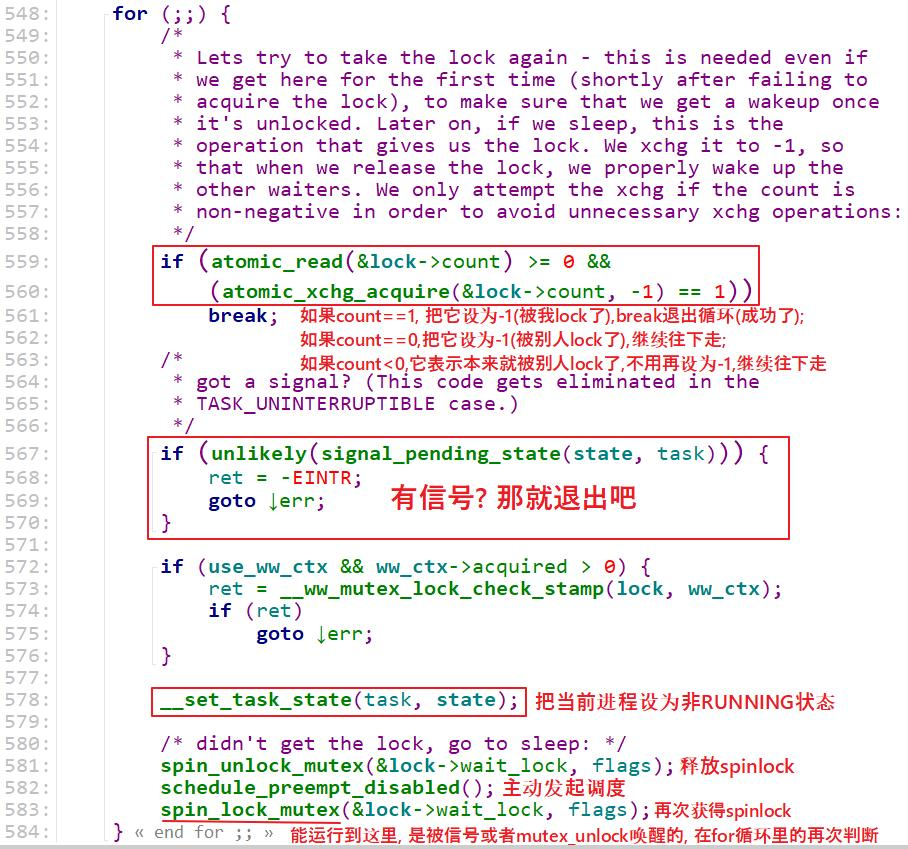

The __mutex_lock_common function is fairly long, so only the key code is shown here:
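Independently of the kernel listing, the central retry step of a count-based slow path can be modeled in user space. The sketch below rests on an assumption that goes beyond the text: a waiter swaps count to -1 and owns the mutex only if the old value was 1, so count stays negative while anyone may be waiting. `model_mutex` and `model_slowpath_try` are hypothetical names, and the queueing on wait_list and the sleeping are deliberately left out.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

/* count: 1 = unlocked, 0 = locked, negative = locked with possible waiters */
struct model_mutex {
    atomic_int count;
};

/*
 * One acquisition attempt as a waiter would make it in the slow path.
 * Returns true if the caller now owns the mutex. A real slow path would
 * call this in a loop and sleep between attempts.
 */
bool model_slowpath_try(struct model_mutex *m)
{
    assert(m != NULL);

    /* Already marked as contended: no point in writing to it again. */
    if (atomic_load(&m->count) < 0)
        return false;

    /*
     * Swap in -1. If the old value was 1 the mutex was free and is now ours;
     * count stays at -1 so that the eventual unlock takes its slow path
     * and checks for waiters.
     */
    return atomic_exchange_explicit(&m->count, -1, memory_order_acquire) == 1;
}
```

With count = 1 the attempt succeeds and leaves count at -1; with count = 0 it fails but still marks the mutex as contended; with count already negative it fails without modifying anything.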

Implementation of mutex_unlock

mutex_unlock also has two paths, a fastpath and a slowpath: if the fastpath succeeds, the slowpath is not needed.

The code is as follows:

1. fastpath

先看看fastpath的函数:__mutex_fastpath_lock,这个函数在下面2个文件中都有定义:

include/asm-generic/mutex-xchg.h

include/asm-generic/mutex-dec.h

使用哪一个文件呢?看看arch/arm/include/asm/mutex.h,内容如下:

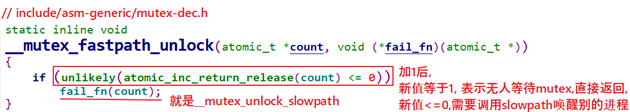

所以,对于ARMv6以下的架构,使用include/asm-generic/mutex-xchg.h中的__mutex_fastpath_lock函数;对于ARMv6及以上的架构,使用include/asm-generic/mutex-dec.h中的__mutex_fastpath_lock函数。这2个文件中的__mutex_fastpath_lock函数是类似的,mutex-dec.h中的代码如下:

In most cases the mutex's value is 1 after the increment, which means nobody is waiting for it, so the fastpath function only has to bump count back to 1 and is done.
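The increment-and-test behavior can be modeled the same way in user space. Again this is a sketch under assumptions rather than the kernel's code: `model_mutex`, `model_fastpath_unlock`, `model_unlock_slowpath`, and `slowpath_calls` are hypothetical names, and the placeholder slow path only counts its invocations.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

/* count: 1 = unlocked, 0 = locked, negative = locked with possible waiters */
struct model_mutex {
    atomic_int count;
};

/* How many times the slow path was entered, so the effect is observable. */
int slowpath_calls;

/* Placeholder slow path: a real one would wake a waiter from wait_list. */
void model_unlock_slowpath(struct model_mutex *m)
{
    (void)m;
    slowpath_calls++;
}

/* Fast path: one atomic increment; fall back if the result is still <= 0. */
void model_fastpath_unlock(struct model_mutex *m,
                           void (*fail_fn)(struct model_mutex *))
{
    assert(fail_fn != NULL);

    /* atomic_fetch_add returns the OLD value, so add 1 to get the new one. */
    int newval = atomic_fetch_add_explicit(&m->count, 1, memory_order_release) + 1;

    if (newval <= 0)
        fail_fn(m);  /* count was negative: someone may be waiting */
}
```

Unlocking from count = 0 gives 1 and skips the slow path entirely; unlocking from count = -1 gives 0, which is not positive, so the slow path runs.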

2. slowpath

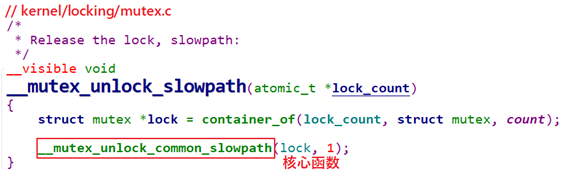

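If the mutex's value is still less than or equal to 0 after the increment, someone is waiting for the mutex and has to be found on wait_list and woken up. That is the job of the slowpath function, __mutex_unlock_slowpath.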

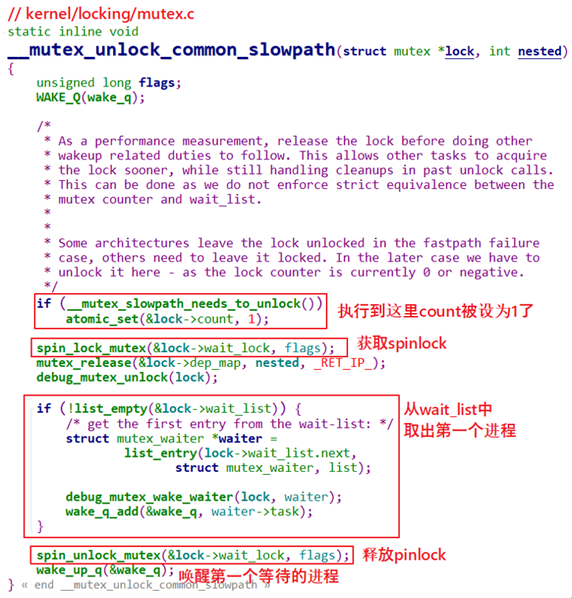

The code of __mutex_unlock_common_slowpath is shown below; its main job is to pick the first process on wait_list and wake it up:
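The "wake the first waiter" step can be illustrated with a single-threaded user-space model. This is a sketch under assumptions, not the kernel function: `model_waiter`, `model_mutex`, and `model_unlock_slowpath` are hypothetical names, the wait list is a plain singly linked FIFO, waking is reduced to setting a flag, and the locking that must protect the list in real code is omitted. Resetting count to 1 before the wake-up is also an assumption of this model.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical wait-list entry: one per sleeping process. */
struct model_waiter {
    int                  task_id;
    bool                 woken;  /* stands in for actually waking the process */
    struct model_waiter *next;
};

/* Simplified mutex: plain int count, FIFO list with the oldest waiter first. */
struct model_mutex {
    int                  count;
    struct model_waiter *wait_head;
};

/*
 * Slow-path unlock: mark the mutex free, then wake the first waiter only.
 * Returns the waiter that was woken, or NULL if the list was empty.
 * The entry is left on the list in this model; dequeuing is out of scope.
 */
struct model_waiter *model_unlock_slowpath(struct model_mutex *m)
{
    /* The fast path only gets here when the incremented count is still <= 0. */
    assert(m->count <= 0);

    m->count = 1;  /* the mutex is free again */

    struct model_waiter *first = m->wait_head;
    if (first != NULL)
        first->woken = true;  /* only the head of the queue is woken */

    return first;
}
```

With two waiters queued, only the first one is marked as woken and count goes back to 1; the second waiter stays asleep until a later unlock reaches it.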